With a new NSF grant, computer scientists are developing a precision flood prediction system that pushes the boundaries of the young field of geometric deep learning. A new grant to researchers at UAB and the University of Florida from the National Science Foundation is funding an innovative study aimed at precision flood prediction. The work also focuses on pushing the boundaries of the young field of geometric deep learning, which promises to translate the successes of artificial intelligence and machine learning from “flat” problems into the three dimensions of the actual world. Advances here could lead to better route recommendations in navigation apps as well as new breakthroughs in drug discovery and development of novel, energy-efficient materials.

UAB’s Da Yan, Ph.D., assistant professor in the Department of Computer Science, received a $238,000 grant from the NSF’s Office of Advanced Cyberinfrastructure as part of the project Large-Scale Spatial Machine Learning for 3D Surface Topology in Hydrological Applications.

Yan and his collaborator, Zhe Jiang, Ph.D. — formerly of the University of Alabama and now at the University of Florida’s Center for Coastal Solutions — have already partnered on several preliminary projects that have taught computers to make real-world predictions based on limited data extrapolated onto 3D maps of the Earth. With their new, NSF-funded project, Yan and Jiang are seeking to translate their advances to massive scale.

“In recent years, the availability of vast amounts of Earth imagery data with elevation information has caught my attention,” Yan said. Over the past decade, he has developed new ways to query and mine geospatial data to find optimal routes on road networks, improve traffic modeling and transportation simulations, and predict rainfall.

What these projects have in common is the need to incorporate the physical constraints of the real world into machine learning models that are powerful but often extraordinarily brittle. Deep learning systems capable of identifying cats in millions of internet photos can be stumped when the pixels in these photos are shifted by minute amounts.

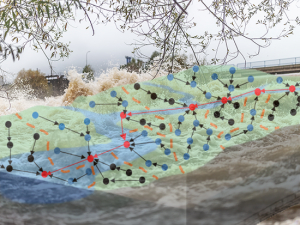

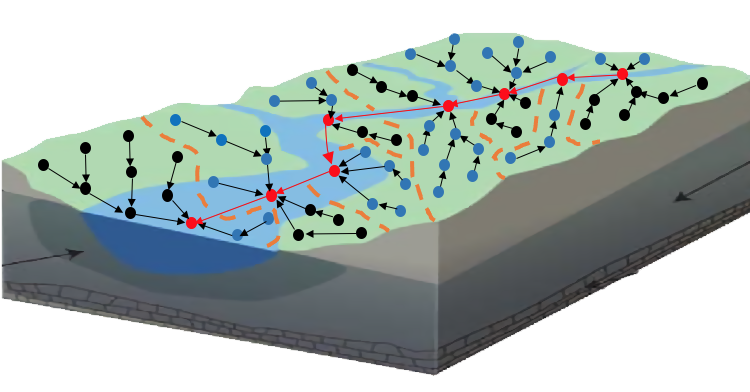

But a “physics-aware” model with a built-in understanding of our planet’s geometry can avoid these pitfalls and grasp that, when it comes to water, what is up must come down. Topology is the branch of mathematics that studies the properties of spaces that are preserved under continuous deformation, such as which regions are connected to which. In the case of Yan and Jiang’s project, that means creating a machine learning model that knows that water collects at low points and runs off from high points. The goal is to train a system that takes as input the limited information available during a catastrophic flood and extrapolates, on a large scale, which areas will be under water and which will be dry. This is crucial information for first responders trying to reach the disaster area, plan the best routes to evacuate trapped residents or determine the spots that need help soonest.
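
The physical constraint described here, that water settles in connected low-lying terrain, can be illustrated with a toy example. The sketch below is not Yan and Jiang’s model, which is a learned system; it is a plain breadth-first flood fill over a small elevation grid. The function name `flood_extent`, the grid values and the water level are all hypothetical and chosen only for illustration. Starting from one cell known to be flooded, it marks every neighboring cell that is connected to it and lies at or below the water surface.

```python
from collections import deque


def flood_extent(elevation, seed, water_level):
    """Return the set of (row, col) cells reachable from `seed` through
    4-connected neighbours whose elevation is at or below `water_level`."""
    rows, cols = len(elevation), len(elevation[0])
    r0, c0 = seed
    if elevation[r0][c0] > water_level:
        return set()  # the seed cell itself sits above the water surface

    flooded = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in flooded
                    and elevation[nr][nc] <= water_level):
                flooded.add((nr, nc))
                queue.append((nr, nc))
    return flooded


# Toy elevation grid in metres; the values are invented for illustration.
dem = [
    [5, 4, 3, 6],
    [5, 2, 1, 6],
    [6, 6, 2, 7],
    [1, 6, 3, 7],
]

# One observation: cell (1, 2) is flooded and the water surface is at 3 m.
extent = flood_extent(dem, seed=(1, 2), water_level=3)
print(sorted(extent))
```

In this toy grid the cell at row 3, column 0 is the lowest point on the map, yet it stays dry because higher ground separates it from the observed water. That connectivity is the kind of structure a model looking only at individual pixels would miss.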

Image courtesy Da Yan, Ph.D.

Image courtesy Da Yan, Ph.D.

Creating computer models that can reach this goal involves more than simple tweaks to existing systems, Yan explains. “Spatial machine learning is unique in that the 2D or 3D structures are present and should be specially processed,” he said. “And that requires special, tailor-made model design.”

It also requires finding the fastest, most efficient way to funnel masses of data through the system. This is Yan’s major role in the project. “I am developing solutions to ensure our models can scale to very large data volumes with high processing efficiency, using techniques such as parallel and distributed computing and effective data organization and indexing,” he said. Several years ago, Yan developed T-thinker, which tackles compute-intensive Big Data problems by effectively dividing up the task among a computer’s available CPU cores. T-thinker was named a Great Innovative Idea at the Computing Community Consortium’s Early Career Researcher Symposium in 2018.

For the NSF project, Yan’s proposed parallel learning framework is expected to speed up the model’s inference time by two orders of magnitude on a moderate-sized computer cluster.
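
The general idea of dividing a job among CPU cores can be sketched in a few lines. The example below is not T-thinker or the proposed NSF framework; it is a minimal illustration using Python’s standard `concurrent.futures` module. The helper names `split_rows`, `count_below` and `count_low_cells` are hypothetical. The sketch splits an elevation grid into row bands, counts the low-lying cells in each band on a separate worker, and sums the partial results.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def split_rows(grid, n_parts):
    """Split a list of rows into at most `n_parts` contiguous chunks."""
    n_parts = max(1, min(n_parts, len(grid)))
    size, extra = divmod(len(grid), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        # The first `extra` chunks take one additional row each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(grid[start:end])
        start = end
    return chunks


def count_below(args):
    """Worker task: count the cells in one chunk at or below `level`."""
    chunk, level = args
    return sum(1 for row in chunk for value in row if value <= level)


def count_low_cells(grid, level, n_workers=None,
                    executor_cls=ProcessPoolExecutor):
    """Count cells at or below `level`, one chunk of rows per worker."""
    n_workers = n_workers or os.cpu_count() or 1
    chunks = split_rows(grid, n_workers)
    with executor_cls(max_workers=n_workers) as pool:
        partial_counts = pool.map(count_below,
                                  [(chunk, level) for chunk in chunks])
        return sum(partial_counts)
```

When run as a script with the default process pool, the call to `count_low_cells` should sit under an `if __name__ == "__main__":` guard, which Python’s `multiprocessing` machinery requires on some platforms. A real system would also have to handle work that crosses chunk boundaries, which this sketch ignores.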

The proposed system will be deployed for real-world rapid flood disaster response, Yan says. It also will be used to validate and calibrate the National Oceanic and Atmospheric Administration’s National Water Model, launched in 2016 to improve the government’s ability to forecast flooding across the country.

Mapping new careers for Alabama high schoolers

Students in Alabama high schools will benefit through Yan’s NSF grant, he notes. “High school students can get experience in UAB labs to visualize Earth imagery data, work with various Earth imagery data formats and apply spatial machine learning models,” Yan said. And student projects made possible through the grant will be entered in regional science fairs.