Written by Brian C. Moon - Feb. 24, 2025

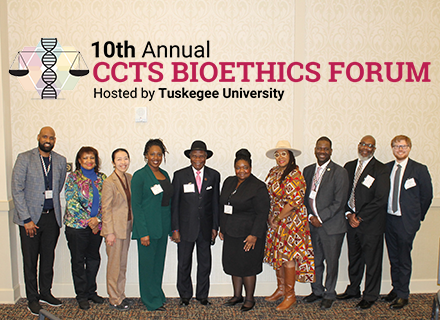

On February 19, 2025, the 10th Annual CCTS Bioethics Forum brought together researchers, bioethicists, students, healthcare professionals, and community members from across the CCTS Partner Network to examine the transformative role of Artificial Intelligence (AI) in healthcare and public health practices. This year's forum was hosted by Tuskegee University and co-sponsored by the Forge AHEAD Center, Tuskegee University, the CCTS, UAB Superfund Research Center, and UAB Research Center of Excellence in Arsenicals. With a theme of "AI in Action: Navigating Ethics and Opportunities in Healthcare and Public Health Practices," the forum explored how AI is being used in medical and public health settings, while tackling critical ethical concerns such as algorithmic bias, data privacy, accountability, and the need for human oversight. The event opened with an overview from Dr. Stephen Sodeke, Bioethicist, Tuskegee University, who welcomed participants and set the tone for the conversations ahead.

Keynote Address: The Ethical Imperative of AI in Public Health

2025 Bioethics Forum Keynote Speaker, Francesca G. Weaks

Attendees heard a compelling keynote address from Francesca G. Weaks, DrPH, MCHES, a leading expert in health ethics. Dr. Weaks offered a broad perspective on how AI is shaping public health interventions, clinical decision-making, and patient care. She emphasized the responsibility of researchers and healthcare professionals to ensure that AI-driven solutions do not worsen existing healthcare disparities, and she urged attendees to approach AI development and implementation with a commitment to justice for all, transparency, and fairness.

Panel Discussion: Real-World Ethical Considerations in AI

A highlight of the forum was the panel discussion, which featured experts across medicine, public health, AI research, and community engagement. Moderated by Dr. Stacy Lloyd, Assistant Professor, Department of Pathobiology, Tuskegee University, the panel included Dr. Ryan Melvin, Associate Professor and Endowed Faculty Scholar for Data Science, Artificial Intelligence, and Machine Learning, Department of Anesthesiology and Perioperative Medicine, UAB School of Medicine; Dr. Carol Agomo, Program Director for Community Outreach and Engagement, Division of General Internal Medicine & Population Science/Forge AHEAD Center, University of Alabama at Birmingham; Mr. Chris Williams, community member and Forge AHEAD CAB member; and Dr. Candy Tate, Museum Curator, Tuskegee University. The panelists engaged in a thought-provoking discussion of the ethical challenges of AI applications in healthcare, including:

- AI-driven insurance claim denials: A recent class-action lawsuit underscored how AI-based decision-making in Medicare Advantage claims may harm rural communities. Panelists debated the need for regulatory oversight to prevent unethical use of AI in insurance and healthcare access.

- Bias in AI-driven decision-making: AI systems, often trained on historically biased datasets, risk perpetuating discrimination in medical diagnoses and treatment recommendations. Panelists emphasized the importance of human oversight, transparency, and equitable training data.

- The role of community engagement: Building trust in AI requires inclusive conversations with the populations most impacted. Speakers emphasized collaborative decision-making that includes patients, healthcare providers, and ethicists in AI development.

Lightning Talks: AI Developers in Action

- Open Knowledge Networks: Researchers from the Alabama Center for Advancement of AI presented a system designed to aggregate federally available healthcare datasets, making complex medical data more accessible and usable for public health and clinical applications.

- AI for clinical research and diagnostics: Dr. Ryan Melvin demonstrated AI-powered tools that assist with literature searches, grant writing, and patient risk assessment, showing how AI can streamline research and decision-making for medical professionals.

- Ethical considerations in AI-assisted medical diagnosis: Presenters discussed how AI models can enhance efficiency while introducing risks, emphasizing the need for human oversight, accountability, and regulatory safeguards in AI-driven healthcare.

Key Takeaways: Ethical AI for an Equitable Future

Throughout the forum, a recurring theme was how to balance innovation with ethical responsibility. Attendees left with key insights on:

- The necessity of transparency and explainability in AI-driven healthcare solutions.

- The importance of regulatory policies that protect patients from AI-driven harm.

- The role of interdisciplinary collaboration between computer scientists, clinicians, ethicists, and community members.

Moving Forward: The Ongoing Conversation on AI in Healthcare

The conversation does not end here. The CCTS invites all stakeholders to continue engaging in future discussions, policy development, and research initiatives to shape an AI-driven healthcare landscape that is both innovative and just. If you were unable to attend or would like to revisit the conversations, recordings are available on the CCTS video channel. To stay informed about all upcoming CCTS events, subscribe to the weekly CCTS Digest and follow the CCTS on LinkedIn.
